One of the most widely used tools for making informed, data-driven decisions is the A/B test. In fact, marketers who run them find that one out of eight A/B tests drives a significant change in metrics. Learn the ins and outs of A/B testing in marketing, and how to apply your results to your marketing campaigns, below!

- What Is A/B Testing?

- Why Should You Conduct Split Tests?

- How Does A/B Testing Work?

- What Should You A/B Test?

- When Should You Start A/B Testing?

- How to Set Up A/B Tests

- How to Analyze A/B Test Results

What Is A/B Testing?

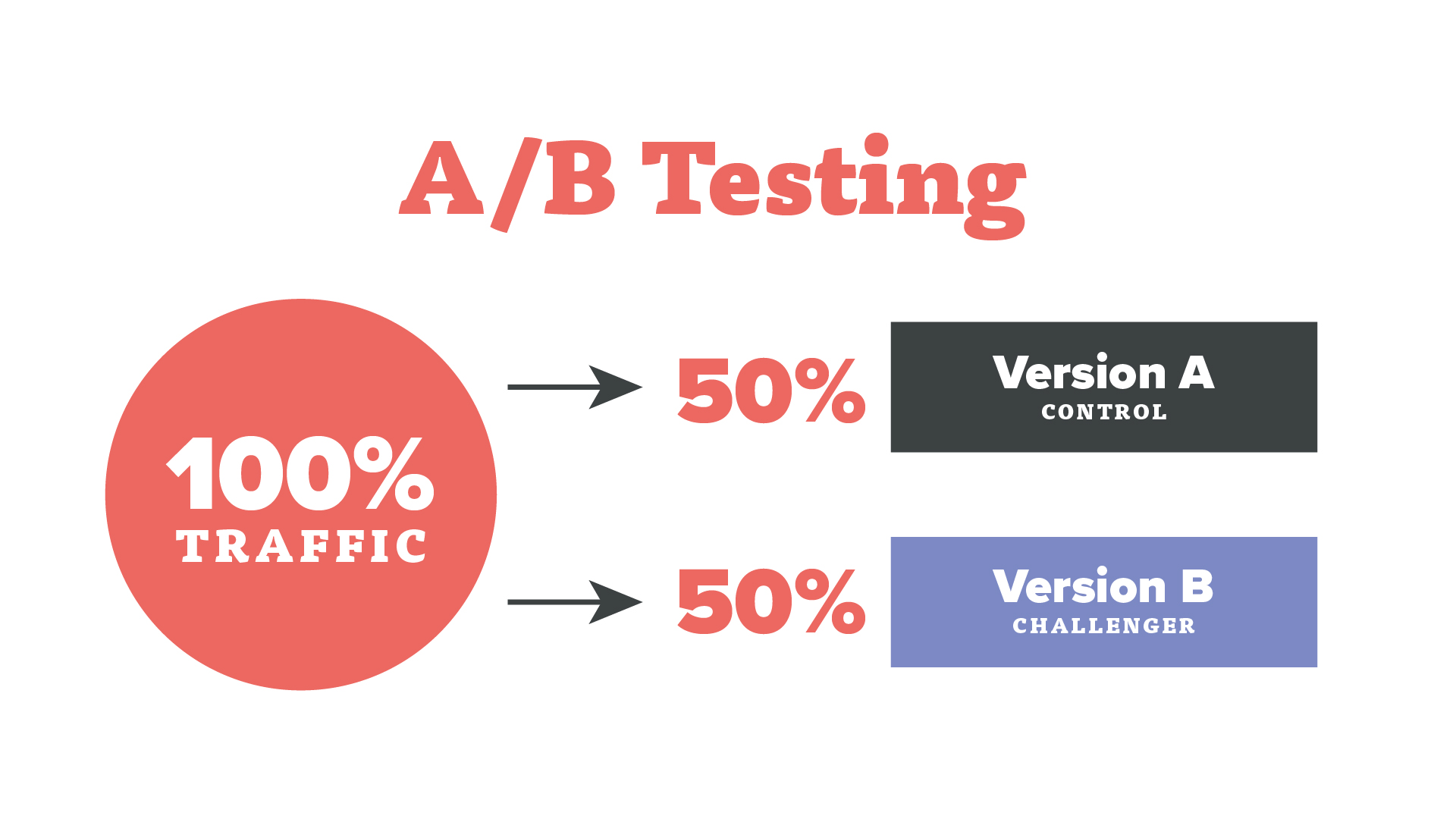

A/B testing, also called split testing, is a research technique marketers use to determine which of two versions of a variable (the control and the challenger) performs better with online audiences. The two versions are randomly shown to different segments of your audience so you can track which one has the most impact on the A/B testing goals you’ve set.
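To make the random split concrete, here is a minimal Python sketch of one common way to assign visitors to the control or the challenger: hashing a user ID so each visitor lands in the same group on every visit. The function `assign_variant`, the experiment name, and the user IDs are hypothetical, and testing platforms handle assignment internally, so treat this as an illustration of the idea rather than how any particular tool works.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Return "control" or "challenger" for a user, stable across visits.

    Hashing the experiment name together with the user ID gives each user a
    pseudo-random but repeatable position, so a returning visitor keeps
    seeing the same version for the life of the test.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # First 8 bytes of the SHA-256 digest, read as an integer in [0, 2**64).
    position = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    # Users whose position falls below the split go to the control group.
    return "control" if position < split * 2**64 else "challenger"


if __name__ == "__main__":
    # Hypothetical user IDs and experiment name, for illustration only.
    counts = Counter(assign_variant(f"user-{i}", "title-tag-test") for i in range(10_000))
    print(counts)  # roughly 5,000 users in each group
```

Including the experiment name in the hash means the same visitor can land in different groups across different experiments, which keeps one test's split from biasing the next.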

Example 1: Title Tag A/B Test

Extra Space Storage’s city pages display all stores within a region to users. Hurrdat Marketing proposed a title tag test to Extra Space Storage to help grow its high-priority city pages.

Hurrdat Marketing created three different title tags for the test: one displaying the lowest price in each city, one using keyword variations, and one showing how many locations were in the city. The title tags were the only change made to the pages. Although all test groups outperformed the original, the version showing the lowest price in the city produced a 60% increase in traffic and 138% growth in conversion rate. These results were then applied to city pages sitewide.

Example 2: Email Pop-Up A/B Test

Frontier Jackson, a provider of CBD products, was having trouble convincing first-time customers to make a purchase. Hurrdat Marketing suggested offering product discounts to users who signed up for the email newsletter, then continually ran A/B tests on different types of pop-ups, such as timed, exit-intent, and inactivity pop-ups.

In just one year, Frontier Jackson’s email subscriber list grew from zero to over 1,000. The primary pop-ups saw conversion rates of 5.89% and 9.29%, and the automated emails achieved a 40% open rate.

Why Should You Conduct Split Tests?

A/B testing allows marketers to make user-focused, data-driven decisions that can improve important website metrics, make email outreach more appealing, increase social media engagement, and more. And because split tests are user-focused, you can implement solutions that genuinely resonate with your audience, ultimately leading to a higher marketing ROI.

Here are some benefits of incorporating A/B testing into your marketing strategy:

- Learn what page elements engage your audience

- Solve customer pain points

- Improve user experience

- Maximize existing traffic to help increase conversions

- Reduce bounce rates on your website and email, paid, and social campaigns

- Optimize the performance of your website, emails, advertisements, and social media accounts

- Try out low-risk modifications for your marketing channels to help increase future business gains

- Use data to make strategic decisions on any changes you make

- Achieve statistically significant improvements across your channels

How Does A/B Testing Work?

Before you begin the A/B testing process, identify which website, paid, or social metrics you want to track and see where you may be falling short. Tools like heat maps and session recordings can help you gather data on user behavior. With this user data, set clear A/B testing goals by looking at which metrics you want to improve. You’ll also need to determine which key performance indicators (KPIs) to track during and after your A/B tests.

Here are some common KPIs to track during A/B testing:

- Conversion rate

- Click-through rate

- Bounce rate

- Engagement rate

- Audience growth rate

- Amplification rate

- Social media reach

- Impressions

- Cost per click

- Video views
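Several of these KPIs are simple ratios of raw counts. The Python sketch below assumes a hypothetical `VariantStats` record and uses one common definition of each metric; analytics platforms define some of them differently (for example, conversion rate may be measured per user rather than per session), so match your platform’s definitions when comparing versions.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts collected for one version (hypothetical record layout)."""
    impressions: int   # times the ad, email, or post was shown
    clicks: int        # clicks on the tracked link or CTA
    sessions: int      # visits that landed on the tested page
    bounces: int       # sessions that left without further interaction
    conversions: int   # completed goal actions (purchases, sign-ups, etc.)
    ad_spend: float    # paid media cost attributed to this version


def _ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 instead of raising when there is no data yet."""
    return numerator / denominator if denominator else 0.0


def kpis(stats: VariantStats) -> dict:
    """Compute a few common A/B testing KPIs from raw counts."""
    return {
        "click_through_rate": _ratio(stats.clicks, stats.impressions),
        "conversion_rate": _ratio(stats.conversions, stats.sessions),
        "bounce_rate": _ratio(stats.bounces, stats.sessions),
        "cost_per_click": _ratio(stats.ad_spend, stats.clicks),
    }


if __name__ == "__main__":
    # Made-up numbers for one version, for illustration only.
    control = VariantStats(impressions=10_000, clicks=250, sessions=240,
                           bounces=96, conversions=12, ad_spend=300.0)
    print(kpis(control))
    # {'click_through_rate': 0.025, 'conversion_rate': 0.05, 'bounce_rate': 0.4, 'cost_per_click': 1.2}
```

Computing the same KPIs the same way for the control and the challenger is what makes the two versions comparable.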

In addition to tracking KPIs, you’ll need to identify a single variable from your user data to focus on for your A/B test. This can be a fresh idea or one inspired by a website audit or an email, paid, or social campaign audit. The new variation of whatever you’re testing is the challenger, and the original version is the control. After a set testing period, your results will show whether there is a winner, meaning the version of your variable that moved your business metrics in a positive direction. Even after you’ve implemented the winning change, you’ll still want to monitor results.

What Should You A/B Test?

After deciding on your A/B testing goal, you’ll need to establish what you’re testing. While you can test any element in an A/B test, focus on items that affect conversions and user experience.

Create an A/B test hypothesis to shape the test. Your hypothesis should identify a problem, propose a solution, and predict the impact of the change. Change only one variable per A/B test so you know exactly what was responsible for any change in your conversions.

Here are some elements you can experiment with in A/B tests:

- Headers

- Images

- Social media posting times

- Types of content

- Content format

- Body copy

- Subject lines

- Design & layout

- Navigation

- Contact forms

- CTAs

- Content length

When Should You Start A/B Testing?

There is never a wrong time to add A/B testing to your marketing timeline for email, social, paid, and web design strategies. The end goal of an A/B test is to improve a key metric, such as traffic, conversion rate, sales, social media engagement, or clicks. Here are some instances where an A/B test might prove most beneficial:

- Trying out new features and design changes

- Optimizing for a specific goal

- Making data-based decisions

When Not to Run an A/B Test

Though A/B testing can improve your marketing campaigns, there are some instances when you should skip a split test and look to other evaluation methods.

If your user base is too small or doesn’t reflect the audience you’re trying to test, you won’t have enough data for statistically significant results and meaningful conclusions, so the changes you make to your platform may not be the most effective option. The most convenient way to determine the sample size you need is a sample size calculator, which can help you set an appropriate audience size and test length.
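Sample size calculators typically rely on a formula like the one sketched below in Python, which estimates how many visitors each version needs for a two-sided test comparing two conversion rates. It assumes the standard normal approximation, a 5% significance level, and 80% power by default; `sample_size_per_variant` is a hypothetical helper, and individual calculators may use slightly different formulas, so their numbers can differ a little.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float,
                            relative_lift: float,
                            alpha: float = 0.05,
                            power: float = 0.8) -> int:
    """Visitors needed in EACH version to detect a given relative lift.

    Uses the normal-approximation formula for a two-sided test comparing
    two conversion rates (hypothetical helper, for illustration).
    """
    p1 = baseline_rate                        # control conversion rate
    p2 = baseline_rate * (1 + relative_lift)  # challenger rate we hope to detect
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_avg = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_avg * (1 - p_avg))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # 5% baseline conversion rate, hoping to detect a 20% relative lift (5% to 6%).
    print(sample_size_per_variant(0.05, 0.20))
```

For a 5% baseline and a 20% relative lift, this works out to a little over 8,000 visitors per version. Because the required sample grows with the inverse square of the difference, halving the lift you want to detect roughly quadruples the visitors you need, which is why smaller sites struggle to reach significance.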

Additionally, you have to determine whether running an A/B test is worth it. If you’re considering testing an element that’s already a proven industry standard, like a “contact us” button on each page, consider analyzing your data for a new variable instead. And if you don’t have time to monitor your results, adjust variables, and implement the winning changes, consider waiting until you can dedicate more focus to testing.

How to Set Up A/B Tests

Now that you’ve defined what you want to test, you’ll need to run the test for a set period to get results. Aside from choosing A/B testing software, there are several steps you need to follow to get reliable results. Here’s how to run an A/B test:

- Analyze Visitor Data: Collect data on how users interact with your website, emails, social media accounts, and paid advertisements. Heat maps and session recordings can show you how users navigate your online channels.

- Identify a Variable to Test: Select an area for improvement based on your data and use this to create a hypothesis.

- Create a Hypothesis Based on Visitor Data: Write a solid hypothesis that identifies the problem, proposes a change to solve it, and estimates the impact of that change.

- Define Testing Period: Most A/B tests run for around 2-8 weeks, but your testing length will depend on your unique testing goals and how much traffic you get.

- Run Split Test(s): Select and run your variations through A/B testing software like Adobe Target, AB Tasty, VWO, or another platform for the defined testing period.

- Analyze Test Results: Evaluate test results and determine if you should implement the change or extend the testing period.
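Once you know the sample size each version needs, you can turn it into a testing period. This Python sketch assumes every daily visitor enters the test and traffic is split evenly across versions; the helper `estimate_test_weeks` is hypothetical, and its 2-week minimum and 8-week maximum simply mirror the typical range given in the steps above.

```python
import math
from typing import Optional


def estimate_test_weeks(per_variant_sample: int,
                        daily_visitors: int,
                        variants: int = 2,
                        min_weeks: int = 2,
                        max_weeks: int = 8) -> Optional[int]:
    """Estimate how many whole weeks a test must run to reach its sample size.

    Returns None when the target can't be reached within max_weeks, a sign
    the test needs more traffic or a larger expected effect.
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    # Total visitors needed across all versions, divided by daily traffic.
    days_needed = math.ceil(per_variant_sample * variants / daily_visitors)
    # Round up to whole weeks so weekday and weekend behavior are both covered.
    weeks = max(min_weeks, math.ceil(days_needed / 7))
    return weeks if weeks <= max_weeks else None


if __name__ == "__main__":
    print(estimate_test_weeks(8_158, 1_000))  # 3 (17 days, rounded up to whole weeks)
    print(estimate_test_weeks(8_158, 200))    # None (would need 12 weeks)
```

Rounding up to whole weeks is a common practice rather than a hard rule; it keeps a test from ending midweek, when traffic patterns may differ from the weekend.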

How to Analyze A/B Test Results

It may sound obvious, but remember that the amount of data you’ll need to sift through grows with the length of your test period. Your split test results will often reveal a winner, along with key insights into how users behave on your online platforms. When interpreting your A/B test results, consider the following factors:

- Sample Size: The larger the sample size, the more data you’ll have to draw conclusions from. If you didn’t get much traffic during the test period, you won’t have much information to go on.

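- Statistical Significance: Significance indicates whether the difference between your versions is larger than random chance would typically produce. A common standard is a 5% significance level (95% confidence): if the test’s p-value is below 0.05, you can treat the result as statistically significant. A large difference on a small sample may not be significant, while a small difference on a large sample can be.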

- Compare Your Results to Your Goal Metric: Did either variation hit your goal? It’s completely normal for a test not to support your hypothesis, but make sure to record your results either way.

- Segment Your Audience Based on Results: Segmenting your audience is key to understanding how different groups respond to your variations. Common ways to segment users include visitor type, device, and traffic source.
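To check significance yourself, one common approach for conversion rates is a pooled two-proportion z-test. The Python sketch below computes the relative lift and a two-sided p-value, then repeats the test for each audience segment. The counts are hypothetical, `two_proportion_z_test` is an illustrative helper rather than any testing tool’s API, and platforms may use other statistical methods (such as Bayesian or sequential approaches), so their reported numbers can differ.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare control (A) and challenger (B) conversion rates.

    Returns (relative_lift, p_value) from a two-sided pooled z-test.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate assumes no difference between versions (the null hypothesis).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; cannot test")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    lift = (p_b - p_a) / p_a if p_a > 0 else math.inf
    return lift, p_value


if __name__ == "__main__":
    # Hypothetical results: (control conversions, control visitors,
    #                        challenger conversions, challenger visitors)
    results = {
        "all visitors": (500, 10_000, 600, 10_000),
        "desktop": (260, 4_000, 310, 4_000),
        "mobile": (240, 6_000, 290, 6_000),
    }
    for segment, counts in results.items():
        lift, p = two_proportion_z_test(*counts)
        verdict = "significant" if p < 0.05 else "not significant"
        print(f"{segment}: lift {lift:+.1%}, p = {p:.4f} ({verdict})")
```

The 0.05 cutoff is a convention, not a law. Testing many segments at once raises the chance that one looks significant by luck, so treat a segment-level win as a lead for a follow-up test rather than a final verdict.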

Need assistance with your digital marketing strategy? Hurrdat offers digital marketing services that can help your business get found online, improve UX, and increase conversions. Contact us today to learn more!